Rate Limiting

Here is a list of popular rate-limiting algorithms:

- Token bucket

- Leaking bucket

- Fixed window counter

- Sliding window log

- Sliding window counter

Token bucket

used by amazon(e-commerce) and stripe (payments)

Pros:

- The algorithm is easy to implement.

- Memory efficient.

- Token bucket allows a burst of traffic for short periods. A request can go through as long

as there are tokens left.

Cons:

- Two parameters in the algorithm are bucket size and token refill rate. However, it might be challenging to tune them properly.

Leaking bucket

The leaking bucket algorithm is similar to the token bucket except that requests are processed

at a fixed rate. It is usually implemented with a first-in-first-out (FIFO) queue. The algorithm

works as follows:

- When a request arrives, the system checks if the queue is full. If it is not full, the request

is added to the queue. - Otherwise, the request is dropped.

- Requests are pulled from the queue and processed at regular intervals.

Shopify, an ecommerce company, uses leaky buckets for rate-limiting [7].

Pros:

- Memory efficient given the limited queue size.

- Requests are processed at a fixed rate therefore it is suitable for use cases that a stable

outflow rate is needed.

Cons:

- A burst of traffic fills up the queue with old requests, and if they are not processed in time, recent requests will be rate limited.

- There are two parameters in the algorithm. It might not be easy to tune them properly.

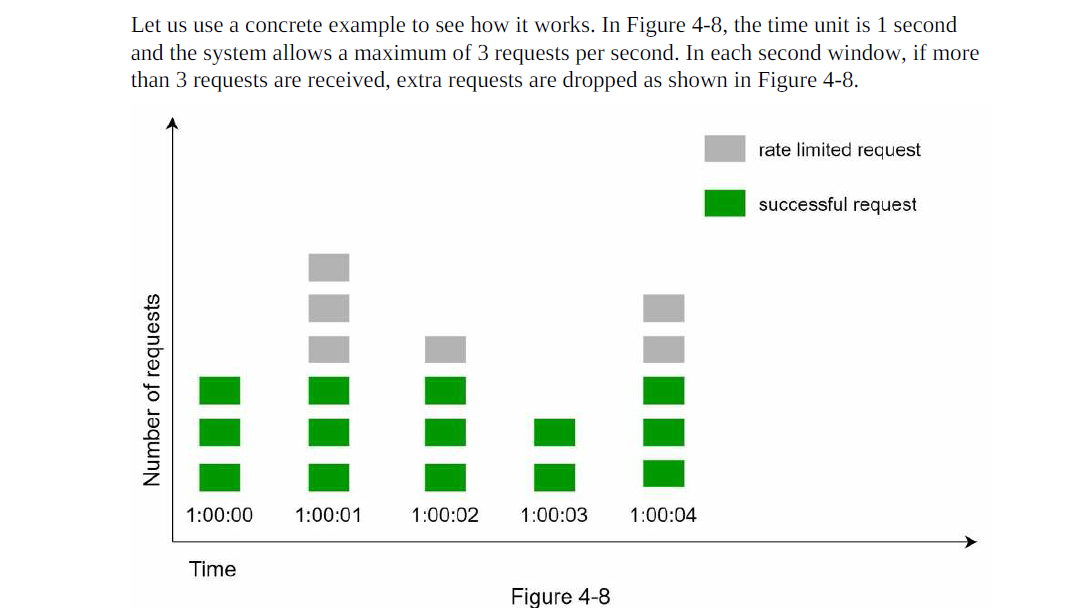

Fixed window counter

Working –

Fixed window counter algorithm works as follows:

- The algorithm divides the timeline into fix-sized time windows and assign a counter for

each window. - Each request increments the counter by one.

- Once the counter reaches the pre-defined threshold, new requests are dropped until a new

time window starts.

Pros:

- Memory efficient.

- Easy to understand.

- Resetting available quota at the end of a unit time window fits certain use cases.

Cons:

- Spike in traffic at the edges of a window could cause more requests than the allowed

quota to go through.

Sliding window log

As discussed previously, the fixed window counter algorithm has a major issue: it allows

more requests to go through at the edges of a window. The sliding window log algorithm

fixes the issue. It works as follows:

- The algorithm keeps track of request timestamps. Timestamp data is usually kept in

cache, such as sorted sets of Redis [8]. - When a new request comes in, remove all the outdated timestamps. Outdated timestamps

are defined as those older than the start of the current time window. - Add timestamp of the new request to the log.

- If the log size is the same or lower than the allowed count, a request is accepted.

Otherwise, it is rejected.

Pros:

- Rate limiting implemented by this algorithm is very accurate. In any rolling window,

requests will not exceed the rate limit.

Cons: - The algorithm consumes a lot of memory because even if a request is rejected, its

timestamp might still be stored in memory.

Sliding window counter

The sliding window counter algorithm is a hybrid approach that combines the fixed window

counter and sliding window log.

Pros

- It smooths out spikes in traffic because the rate is based on the average rate of the

previous window. - Memory efficient.

Cons - It only works for not-so-strict look back window. It is an approximation of the actual rate

because it assumes requests in the previous window are evenly distributed. However, this

problem may not be as bad as it seems. According to experiments done by Cloudflare ,

only 0.003% of requests are wrongly allowed or rate limited among 400 million requests.