Index

- Introduction

- Dijkstra Algorithm

- Bellman-ford Algorithm

- Floyd-Warshall Algorithm

- Prim’s Algorithm

Introduction

Common Terminology –

- Minimum Spanning Tree (MST) used in prims algorithm

- Goal: To connect all vertices in a graph with the minimum possible total edge weight, without forming any cycles.

- Output: A tree that spans all vertices of the graph with the minimum sum of edge weights.

- Shortest path

- Goal: To find the shortest path between a specific pair of vertices in a graph, minimizing the sum of the edge weights along that path.

- Output: A path (which may include some or all vertices) with the minimum possible total weight between two specific vertices.

Dijkstra Algorithm

- Purpose: Finds the shortest path from a single source to all other vertices in a graph.

- Applicability: Works only with graphs that have non-negative edge weights.

- Woking:

- Uses a greedy approach.

- Iteratively selects the vertex with the smallest known distance, explores its neighbors, and updates their distances.

- Once a vertex is processed, its shortest path is finalised.

Dijkstra’s algorithm is like breadth-first search (BFS), except we use a priority queue instead of a normal first-in-first-out queue. Each item’s priority is the cost of reaching it.

Note-Implementation is similar to Prim’s algorithm for minimum spanning tree.

REf-

- https://www.interviewcake.com/concept/java/dijkstras-algorithm (desciption working in chart)

- https://www.tutorialcup.com/interview/algorithm/dijkstra-algorithm.htm (application + short implemntation)

- https://www.interviewbit.com/tutorial/dijkstra-algorithm/ (Pseudo code + Graph Algo)

- https://www.interviewbit.com/tutorial/breadth-first-search/#breadth-first-search

Bellman-Ford Algorithm

- Purpose: Also finds the shortest path from a single source to all other vertices, but can handle graphs with negative edge weights.

- Applicability: Works with graphs that may have negative edge weights, and can also detect negative weight cycles (where a cycle’s total weight is negative).

- Woking:

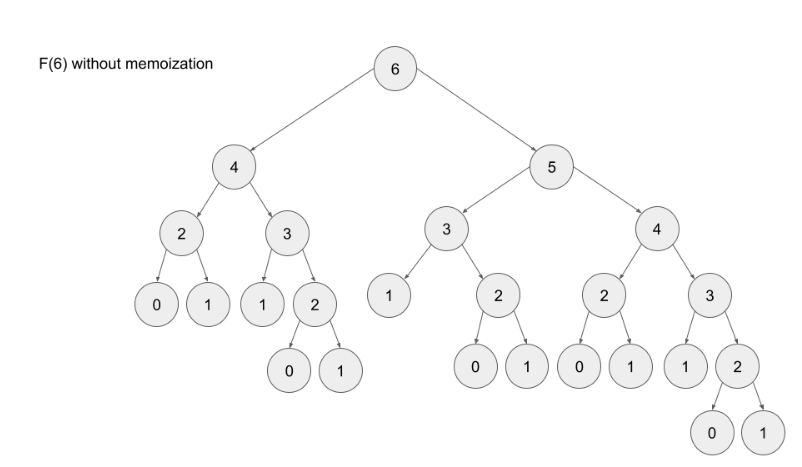

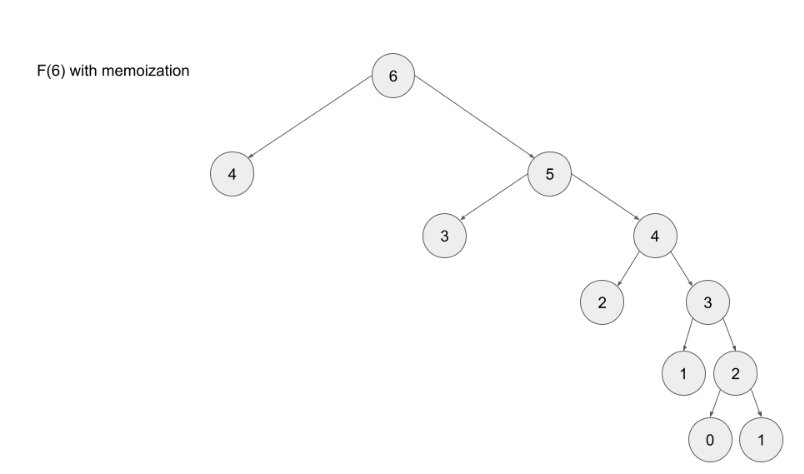

- Uses a dynamic programming approach.

- Iteratively relaxes all edges, meaning it updates the shortest path estimate for each edge based on its current known distance.

- After V−1V-1V−1 iterations, it ensures that the shortest paths are found. If an additional relaxation improves any distance, it indicates a negative weight cycle.

Floyd-Warshall Algorithm

- Purpose: Finds the shortest paths between all pairs of vertices in a graph.

- Applicability: Works with both directed and undirected graphs, including those with negative edge weights.

- Wokings:

- Uses a dynamic programming approach.

- Iteratively updates a distance matrix where each entry

dist[i][j]represents the shortest path from vertexito vertexj. - Considers each vertex as an intermediate point in the path and updates the matrix accordingly.

Prim’s Algorithm

- Purpose: Finds the Minimum Spanning Tree (MST) of a connected, undirected graph. The MST is a subset of the edges that connects all vertices together, without any cycles, and with the minimum possible total edge weight.

- Applicability: Works with weighted, undirected graphs. It requires that the graph be connected, meaning there must be a path between every pair of vertices.